Word Embeddings

A Journey to Low-Dimensional Word Vector Space

@uberalex

You can see my notes and details here. Please do get in touch if you have any comments or questions.

The quick, brown fox jumped over the lazy dog.

Before we start, some very simple basics about language as data. Normally, when we think about language data, we mean a corpus of documents. Each document has paragraphs, which are groups of sentences. The sentences themselves are made up of words and the punctuation between them.
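As a rough illustration of treating a sentence as data, here is a minimal sketch that splits the example sentence into word and punctuation tokens. The `tokenize` helper and its regular expression are assumptions made for this example; real tokenisers handle many more cases (hyphens, numbers, abbreviations).

```python
import re

def tokenize(sentence):
    """Split a sentence into word tokens and single punctuation marks."""
    # First alternative: runs of letters, digits or apostrophes (words).
    # Second alternative: any single non-space character that is not part of a word.
    return re.findall(r"[A-Za-z0-9']+|[^\sA-Za-z0-9']", sentence)

tokens = tokenize("The quick, brown fox jumped over the lazy dog.")
print(tokens)
```

A corpus would then simply be a list of documents, each a list of such token lists.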

A plot of the rank versus frequency for the first 10 million words in 30 Wikipedias (dumps from October 2015) in a log-log scale.
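The data behind a rank-versus-frequency plot can be computed in a few lines. The sketch below is only an illustration on a tiny made-up sample, not the Wikipedia data from the plot; `rank_frequency` is a hypothetical helper name.

```python
from collections import Counter
import math
import re

def rank_frequency(text):
    """Return (rank, word, count) tuples, most frequent word first."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(words)
    return [
        (rank, word, count)
        for rank, (word, count) in enumerate(counts.most_common(), start=1)
    ]

# Tiny made-up sample; a real plot needs millions of words.
sample = "the quick brown fox jumped over the lazy dog and the dog slept"
table = rank_frequency(sample)

# On a log-log scale we plot log(rank) against log(count).
for rank, word, count in table[:3]:
    print(rank, word, count, round(math.log10(rank), 2), round(math.log10(count), 2))
```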

The Distributional Hypothesis

"a word is characterized by the company it keeps"

We can only learn the meaning of words from their association with other words.

Firth, J.R. (1957). A synopsis of linguistic theory 1930-1955. In Studies in Linguistic Analysis, pp. 1-32. Oxford: Philological Society. Reprinted in F.R. Palmer (ed.), Selected Papers of J.R. Firth 1952-1959, London: Longman (1968).

Harris, Z. (1954). Distributional structure. Word, 10(2-3): 146-162.
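One simple way to make "the company a word keeps" concrete is to count which words appear near each other. The sketch below is an assumption-laden toy: the `cooccurrence` helper, the window size and the two-sentence corpus are all invented for illustration.

```python
from collections import Counter, defaultdict

def cooccurrence(sentences, window=2):
    """Count, for each word, the words seen within `window` positions of it."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        for i, word in enumerate(sentence):
            lo = max(0, i - window)
            hi = min(len(sentence), i + window + 1)
            for j in range(lo, hi):
                if j != i:  # a word is not its own context
                    counts[word][sentence[j]] += 1
    return counts

# Made-up corpus: "cat" and "dog" never co-occur, but share contexts.
corpus = [
    "the cat drinks milk".split(),
    "the dog drinks water".split(),
]
contexts = cooccurrence(corpus, window=2)
shared = set(contexts["cat"]) & set(contexts["dog"])
print(shared)
```

Words that keep similar company ("cat" and "dog" here) end up with overlapping context counts, which is the signal that embedding methods compress into dense vectors.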

A word embedding is a parameterised function that maps words from a vocabulary to lower-dimensional vectors of real-valued weights.
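In practice the "parameterised function" is usually just a lookup table: one row of weights per vocabulary word. Here is a minimal sketch; the `Embedding` class, the dimension and the random initial weights are assumptions for illustration (trained weights would come from a model such as Word2Vec).

```python
import random

class Embedding:
    """A lookup table mapping each vocabulary word to a dense vector."""

    def __init__(self, vocabulary, dim, seed=0):
        rng = random.Random(seed)
        self.index = {word: i for i, word in enumerate(vocabulary)}
        # The parameters: one row of `dim` real weights per word.
        # Random here; training would adjust them.
        self.weights = [
            [rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in vocabulary
        ]

    def __call__(self, word):
        return self.weights[self.index[word]]

vocab = ["the", "quick", "brown", "fox", "jumped", "over", "lazy", "dog"]
embed = Embedding(vocab, dim=3)
vector = embed("fox")
print(vector)
```

With a one-hot encoding, "fox" would need a vector as long as the vocabulary; here it is three numbers regardless of vocabulary size.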

Image Source: https://www.tensorflow.org/versions/0.6.0/tutorials/word2vec/index.html

Word2Vec (Mikolov et al.)

Maximise P(Vout|Vin), the softmax probability of an output word given an input word, by gradient descent.
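To make the objective concrete, here is a small sketch of a full-softmax model trained by gradient descent on (input, output) word pairs. It is not the Word2Vec implementation (which uses tricks such as negative sampling or hierarchical softmax to avoid the full softmax); the function names, toy vocabulary, pairs and hyperparameters are all assumptions.

```python
import math
import random

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict(v_in, v_out, centre):
    """P(w | centre) for every word w: softmax over dot products."""
    h = v_in[centre]
    return softmax([sum(a * b for a, b in zip(u, h)) for u in v_out])

def train(pairs, vocab_size, dim=5, lr=0.2, epochs=500, seed=0):
    rng = random.Random(seed)
    v_in = [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(vocab_size)]
    v_out = [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(vocab_size)]
    for _ in range(epochs):
        for centre, context in pairs:
            h = v_in[centre]
            probs = predict(v_in, v_out, centre)
            grad_h = [0.0] * dim
            for w in range(vocab_size):
                # Gradient of -log P(context | centre) w.r.t. the score of w.
                err = probs[w] - (1.0 if w == context else 0.0)
                for k in range(dim):
                    grad_h[k] += err * v_out[w][k]
                    v_out[w][k] -= lr * err * h[k]
            for k in range(dim):
                h[k] -= lr * grad_h[k]
    return v_in, v_out

vocab = ["fox", "jumped", "dog", "slept"]  # toy vocabulary
pairs = [(0, 1), (2, 3)]                   # (input, output): fox->jumped, dog->slept
v_in, v_out = train(pairs, vocab_size=len(vocab))
probs = predict(v_in, v_out, 0)
print(dict(zip(vocab, (round(p, 3) for p in probs))))
```

After training, the rows of `v_in` are the word embeddings; the output vectors are normally discarded.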

Operations on the embedding vector space

Use it to expand queries, find synonyms, disambiguate terms, group items
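Most of these uses boil down to finding nearest neighbours by cosine similarity, sometimes after adding or subtracting vectors. The sketch below uses hand-made four-dimensional vectors that are purely illustrative (not taken from any trained model); `cosine` and `most_similar` are hypothetical helper names.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def most_similar(query, vectors, exclude=(), topn=1):
    """Return the `topn` words whose vectors are closest to `query`."""
    scored = [
        (cosine(query, vec), word)
        for word, vec in vectors.items()
        if word not in exclude
    ]
    return [word for _, word in sorted(scored, reverse=True)[:topn]]

# Made-up vectors; the dimensions loosely mean (royal, male, female, fruit).
vectors = {
    "king":  [0.9, 0.8, 0.1, 0.0],
    "queen": [0.9, 0.1, 0.8, 0.0],
    "man":   [0.1, 0.9, 0.1, 0.0],
    "woman": [0.1, 0.1, 0.9, 0.0],
    "apple": [0.0, 0.1, 0.1, 0.9],
}

# Nearest neighbour: a candidate for query expansion or synonym lookup.
neighbour = most_similar(vectors["woman"], vectors, exclude={"woman"})

# Vector arithmetic: king - man + woman.
query = [k - m + w for k, m, w in zip(vectors["king"], vectors["man"], vectors["woman"])]
analogy = most_similar(query, vectors, exclude={"king", "man", "woman"})
print(neighbour, analogy)
```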

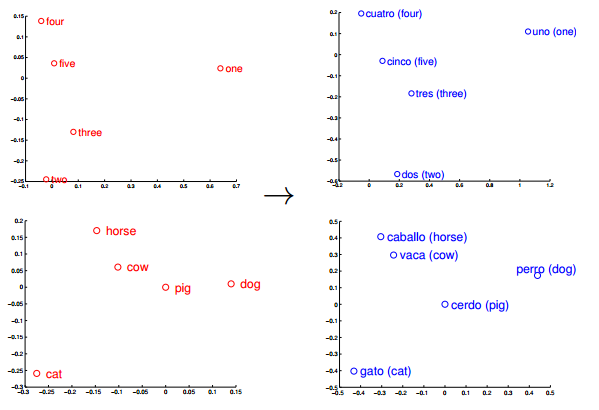

Learn Monolingual Word Embeddings from lots of text

Use a small bilingual dictionary to learn the linear projection

Project unknown word from source embedding to target

Mikolov et al., Exploiting Similarities among Languages for Machine Translation
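The three steps above can be sketched on toy data: given source and target embeddings plus a few dictionary pairs, learn a matrix W so that W times the source vector lands near the target vector, then project a word that is not in the dictionary. Everything here is an assumption for illustration: the two-dimensional vectors are invented (the target space is just the source space rotated by 90 degrees), and the helper names and hyperparameters are hypothetical.

```python
def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def learn_projection(pairs, dim, lr=0.1, epochs=500):
    """Fit W by stochastic gradient descent on the squared error ||W x - z||^2."""
    W = [[0.0] * dim for _ in range(dim)]
    for _ in range(epochs):
        for x, z in pairs:
            pred = matvec(W, x)
            for i in range(dim):
                err = pred[i] - z[i]
                for j in range(dim):
                    # Constant factor 2 of the gradient is folded into lr.
                    W[i][j] -= lr * err * x[j]
    return W

def nearest(vec, vectors):
    """Word whose vector has the smallest squared Euclidean distance to vec."""
    return min(
        vectors,
        key=lambda w: sum((a - b) ** 2 for a, b in zip(vec, vectors[w])),
    )

# Made-up monolingual embeddings.
english = {"one": [1.0, 0.0], "two": [0.0, 1.0], "three": [1.0, 1.0], "four": [1.0, -1.0]}
spanish = {"uno": [0.0, 1.0], "dos": [-1.0, 0.0], "tres": [-1.0, 1.0], "cuatro": [1.0, 1.0]}

# Small bilingual dictionary; "four" is deliberately left out.
dictionary = [("one", "uno"), ("two", "dos"), ("three", "tres")]
pairs = [(english[s], spanish[t]) for s, t in dictionary]

W = learn_projection(pairs, dim=2)
projected = matvec(W, english["four"])
translation = nearest(projected, spanish)
print(translation)
```

Real embeddings have hundreds of dimensions and the dictionary has thousands of entries, but the idea is the same: the relationship between the two spaces is assumed to be approximately linear.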

Over to You

Need lots of pre-processed text, so pre-trained models can be a good place to start.

Gensim (Python), GloVe, WEM (R)

Making Sense of Word2Vec

Levy & Goldberg, 'Linguistic Regularities in Sparse and Explicit Word Representations'

A lot of people use the Google newsgroups data.